On October 17, 2012, the Internet Movie Database (IMDB) will turn 22 years old. Over those 22 years, it’s become one of the most visited websites in the world (somewhere in between Facebook and the New York Times, according to Alexa.com) on the strength of both its factual movie information and the crowdsourced movie ratings that power its Top 250 movies list. Millions upon millions of votes have given these ratings and the Top 250 list an air of authority that most of us take for granted.

Most of us, that is, except for OverthinkingIt.com. Over the past five years, I’ve been conducting an annual statistical review of the Top 250 movies list that attempts to suss out trends and possible biases of the list based on changes to its composition over time.

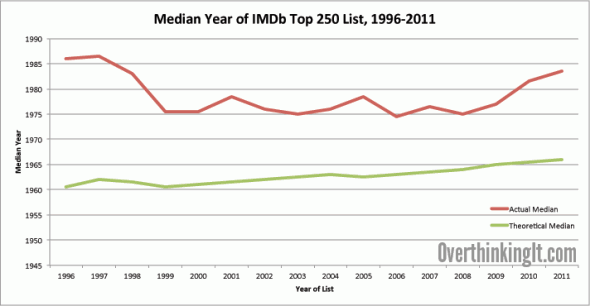

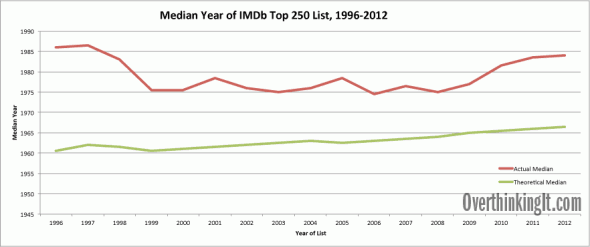

In previous editions of this study, we’ve found that the IMDB list is skewed towards newer movies compared to a list generated by industry professionals: the IMDB Top 250 list from 2008 had a median year of 1975, while the American Film Institute’s list of top 100 films from 2007 had a median year of 1964.5. But we’ve also found that, when looking at changes to the composition of the IMDB Top 250 list from 1996-2011, there is no discernible internal trend towards favoring newer movies at a rate faster than what you’d assume from the linear passage of time:

There does seem to be a recent uptick from 2008-2011, but given the up-and-down history of the years prior to 2008, it’s far too early to say there’s a real trend here.

But enough of this recap. Let’s add the 2012 data and see what the Overthinking It IMDb Top 250 Movies List Analysis, 5th Edition, has in store.

The 2012 List

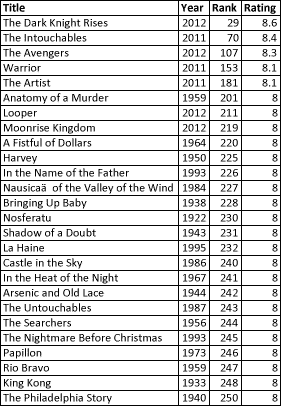

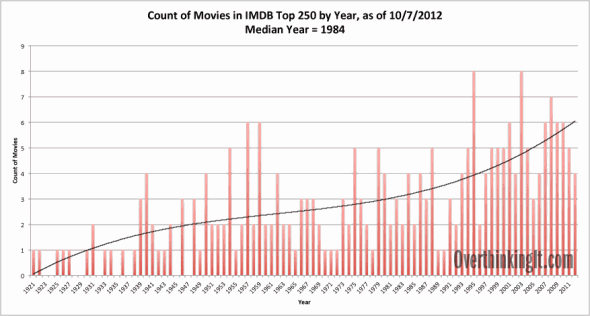

We obviously can’t get at trends when looking at a single year of the list, but let’s pause for a moment and consider what IMDB users are calling the best movies of all time as of October 7, 2012:

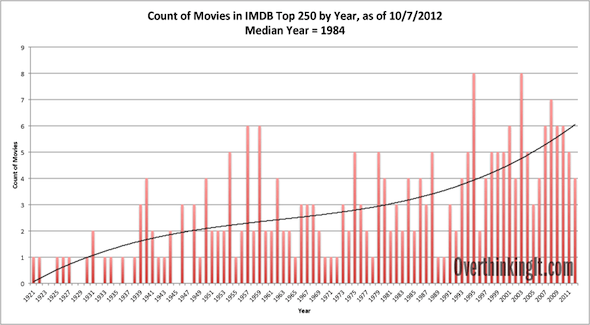

- The median year of the list is 1984. Quick statistics refresher: that means that half of the movies on the list are from before 1984; the other half are after 1984. So yes, the list is still heavily weighted towards newer movies.

- The two best years for movies, according to this list, were 1995 and 2003. Each of those years had 8 movies that made the Top 250.

- The Shawshank Redemption currently sits atop the list with a 9.2 rating. The Godfather, also with a 9.2 rating, is at number 2.

- 4 movies from 2012 have already found their way onto the list: The Dark Knight Rises, The Avengers, Looper, and Moonrise Kingdom.
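For anyone who wants to reproduce the summary numbers above from the raw data, here is a minimal sketch in Python. It assumes you have already pulled the release years of one snapshot into a plain list; the function name `summarize_years` and the toy data are my own placeholders, not the real list.

```python
from collections import Counter
from statistics import median


def summarize_years(release_years):
    """Return (median year, best year(s), number of entries in a best year)."""
    counts = Counter(release_years)
    top_count = max(counts.values())
    # Several years can tie for the most entries, so return all of them.
    best_years = sorted(year for year, n in counts.items() if n == top_count)
    return median(release_years), best_years, top_count


# Toy data for illustration only (NOT the actual Top 250).
toy_years = [1972, 1994, 1995, 1995, 2003, 2003]
print(summarize_years(toy_years))
```

With an even number of entries, `median` averages the two middle values, which is how a list of 250 movies can end up with a median year like 1983.5.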

The 1996-2012 Trend

The short answer is no:

In 2011, the median year of the list was 1983.5. In 2012, after one year, the median had only moved up 0.5 years, to 1984. As I mentioned before, the changes in the list from 2008-2011 seemed to suggest an increasing lean towards new movies, but the change from 2011 to 2012 belies such a trend.

(It’s worth noting that IMDB recently changed the formula used to determine which movies are eligible for the Top 250 list; the threshold for minimum votes to be eligible shot up to 25,000 from a mere 3,000. But the exact effect on the list’s composition is hard to judge, and in general, we don’t have great insight into this and other changes to the algorithm on the list’s composition over time, so I’m setting this recent change aside for the purpose of this analysis. Feel free to debate its effect in the comments.)

“Too Soon Movies”

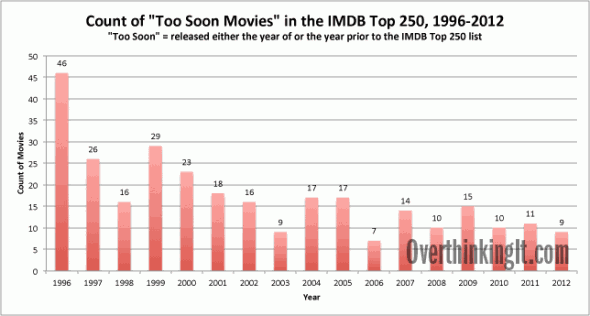

Here’s another way of looking at the list and its bias towards newer movies (or lack thereof). It seems a little strange that a movie that just came out a few months ago (or weeks, as in the case of Looper at the time of this writing) can so quickly be given a spot in the pantheon of the Top 250 list. These occurrences are often used to criticize the legitimacy of the list.

Let’s look for a trend in the prevalence of movies that seem a little “too soon” to be included on the IMDB Top 250. For each list from 1996-2012, I counted the number of movies on the list that were released either the same year of the list or the year prior to the list:

There appears to be a downward trend in the prevalence of these “too soon” movies. Also, remember that plenty of these “too soon” movies eventually drop off the list, so there’s an additional correction process that we don’t see reflected by looking at either this trend or just by looking at any given snapshot of the list.
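The “too soon” counting rule is simple enough to sketch in a few lines of Python. This assumes each snapshot is available as a list of release years keyed by the year of the list; `count_too_soon` and the toy snapshots are hypothetical names and made-up data, not the real lists.

```python
def count_too_soon(list_year, release_years):
    """Count entries released in the list's own year or the year before it."""
    return sum(1 for year in release_years if year in (list_year, list_year - 1))


# Toy snapshots for illustration only: list year -> release years on that list.
toy_snapshots = {
    2011: [1972, 2010, 2011, 1994],
    2012: [1972, 2012, 1994, 1957],
}

too_soon_by_year = {
    list_year: count_too_soon(list_year, years)
    for list_year, years in toy_snapshots.items()
}
print(too_soon_by_year)
```

Running the same function over every snapshot from 1996 to 2012 would give the series whose trend is described above.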

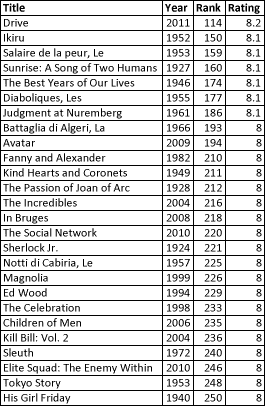

With that in mind, let’s take a look at the new additions to the 2012 list and the drop-offs from the 2011 list.

Additions for 2012

There are 26 movies on the 2012 snapshot of the list that weren’t on the 2011 snapshot. As I previously mentioned, four are from 2012. The rest are spread out between the years 2011 and 1922:

In previous editions, I had separately called out the removal of King Kong and Nosferatu from the list as sad casualties of its relentless forward march, so it’s nice to see these classic monsters of cinema return to some form of prominence.

Removals from 2011

With 26 additions, of course, come 26 removals:

Some of us on this site may be glad to see that Avatar is no longer on the list.
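Finding the additions and removals between two snapshots is a set difference in each direction. Here is a rough sketch, assuming each snapshot is a list of titles spelled consistently from year to year; `diff_snapshots` is a name I made up, and the two short lists are toy stand-ins for the full 250-title snapshots.

```python
def diff_snapshots(previous, current):
    """Return (additions, removals) between two snapshots of titles."""
    prev_titles, curr_titles = set(previous), set(current)
    additions = sorted(curr_titles - prev_titles)  # on the new list only
    removals = sorted(prev_titles - curr_titles)   # on the old list only
    return additions, removals


# Toy stand-ins for illustration only (the real snapshots have 250 titles each).
toy_2011 = ["The Godfather", "Avatar", "Drive"]
toy_2012 = ["The Godfather", "Looper", "King Kong"]

additions, removals = diff_snapshots(toy_2011, toy_2012)
print(additions, removals)
```

Because both snapshots have the same number of entries, the two results always have the same length, which is why 26 additions imply 26 removals.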

Conclusion

While writing this post, I went back and looked over the previous four editions in this series and the numerous comments that people have left for each installment. I’m afraid that this exercise has stagnated a bit, given our limited insight into the factors that affect the ratings and the lack of surprising or counterintuitive findings in the data. More importantly, we keep coming back to the dead end that is the idea of applying a single quantitative rating to a movie and ranking those movies based on their ratings. It’s reductive, and it’s antithetical to the very nature of Overthinking It. Sure, the IMDB Top 250 list is important due to its popularity, and it’s valuable to gain a deeper understanding of its nature, but I think we’ve gone deep enough over the course of these five editions.

That being said: I am open to entertaining new ideas for this study, and if something compelling comes up, then there just may be a sixth edition in the cards. So let me know what you think in the comments!

[Update: here’s my raw data for you number-crunching types.]

“The median year of the list is 1984. … So yes, the list is still heavily weighted towards newer movies.”

What fraction of movies were made after 1984? I suspect that 1984 is pretty close to the median release age of all movies, even if it’s not particularly close to the middle of the time period in which movies have existed.

This Wikipedia article indicates that the rate that big studios release movies has decreased over time:

http://en.wikipedia.org/wiki/Major_film_studio

“During the 1930s, the eight majors averaged a total of 358 feature film releases a year; in the 1940s, the four largest companies shifted more of their resources toward high-budget productions and away from B movies, bringing the yearly average down to 288 for the decade.”

…

“The six primary studio subsidiaries alone put out a total of 124 films during 2006; the three largest secondary subsidiaries (New Line, Fox Searchlight, and Focus Features) accounted for another 30.”

Granted, major studios and indies only create a subset of the movies that might wind up on the IMDB Top 250, but they probably account for the vast majority.

Wow, I stand quite thoroughly corrected. I could make some arguments to chip away at that difference, maybe, but they wouldn’t even come close to explaining it away entirely. I guess way more movies per person were sustainable before television.

I know this is more a place for discussing trends rather than being contentious over specific exclusions, but…

Looper was a perfectly fine movie, but there’s no way it belongs on the Top 250 chart! Especially at the loss of films like Drive, Children of Men, or His Girl Friday? I suppose in a matter of months it will have corrected itself, though.

I don’t know what to add to the focus of the study beyond “please do another next year!”

Here’s an idea: try to measure the recency bias on IMDB ratings. In other words, how much of a boost does a movie get because it just came out? One way to do this would be to look at where movies rank when they’re recent and see where they eventually settle. Of course, I’m sure dropoff isn’t uniform: some movies are excellent, and everyone knows it, and they get a good ranking and keep it. Some maybe start slow and pick up credit later. Are there any patterns? I bet “blockbusters” tend to be among the ones that are overrated when recent, particularly effects-driven blockbusters, since whatever comes out next year will probably eclipse the technical wizardry in this year’s blockbuster.

Recency bias may have multiple causes. One is that we may overrate something we just saw, especially if it is large and impressive (Avatar, Avengers). Another is that probably more recent movies are likely to be seen by more people (though I guess that wouldn’t necessarily increase average rating). Another is that older movies use different conventions of communication that just don’t appeal any more. Not clear which of those things should be counted as “bias”.

Of course, once you’ve measured recency bias, you can compute a “true” top 250. And then we can argue about what’s on it.
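A first pass at the “where they rank when recent versus where they settle” comparison could look like the sketch below. It assumes each yearly snapshot is an ordered list of titles (rank 1 first); `rank_drift` and the placeholder film names are hypothetical, and this only compares debut rank with the latest rank rather than modelling the bias itself.

```python
def rank_drift(snapshots):
    """Map each title to (debut_rank, latest_rank).

    latest_rank is None when the title is absent from the most recent snapshot.
    """
    years = sorted(snapshots)
    debut = {}
    for year in years:
        for rank, title in enumerate(snapshots[year], start=1):
            # setdefault keeps the rank from the first snapshot a title appears in.
            debut.setdefault(title, rank)
    latest = {
        title: rank
        for rank, title in enumerate(snapshots[years[-1]], start=1)
    }
    return {title: (first, latest.get(title)) for title, first in debut.items()}


# Placeholder titles for illustration only.
toy_snapshots = {
    2010: ["Film A", "Film B", "Film C"],
    2011: ["Film A", "Film C", "Film D"],
    2012: ["Film A", "Film D", "Film C"],
}
drift = rank_drift(toy_snapshots)
print(drift)
```

Titles whose latest rank is much worse than their debut rank, or that have dropped off entirely, would be the candidates for having been overrated when recent.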

Hopefully neither Looper nor Drive!

I’m sure you meant “Hopefully neither Looper nor The Dark Knight Rises”, right?

I did not. I found Drive incredibly disappointing (not bad, but it fell well short of being the movie it wanted to be). Haven’t seen Looper. Dark Knight Rises was not bad, but I don’t foresee a lot of people feeling like they just need to see it again in 5-10 years.

But then I think the best movie ever made is Seven Samurai, so I’m clearly not in the mainstream.

Seven Samurai is currently at number 17 in the imdb top 250 – I rather think that does put you in the mainstream!

Yeah I know. But I think it’s been much higher, like #3. I may be thinking of something other than the IMDB 250 though.

Another issue is issue. :)

When did this come out on home media, especially for the “classics”? Some movies are practically a new release since they have been stuck in the vault for generations of moviegoers. Take The Game for instance. Highly regarded but just now showing up in wide release on Criterion. It might show up on next year’s list even though it’s 20 years old and that has to skew the stats.

It would be interesting if the top 250 could be correlated to B.O. and release dates. Will Jaws move up?

Do you have all the lists from 1991 to today? I’d love to see the intersection. That might help with some of the recency bias (though probably not all of it).

Or, even better, a union list, sorted by the number of times a movie has appeared on the list.

I’ve uploaded the raw data from 1996-2011, albeit as separate lists. It’ll take some time to merge all of them into one. Also note that naming conventions for movies changed at some point, so watch out for foreign films that appear as both their English and native titles.

http://www.overthinkingit.com/wp-content/uploads/2012/10/imdb-250-1996-2012-lists-only.xlsx
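For anyone merging the separate lists, here is a minimal sketch of the union and intersection idea in Python. It assumes the spreadsheet has been loaded into a dict mapping each list year to its titles; `appearance_counts` and the `aliases` mapping (for films listed under both native and English titles) are hypothetical helpers, and the data is a toy example.

```python
from collections import Counter


def appearance_counts(snapshots, aliases=None):
    """Return (title, appearances) pairs, most frequent first."""
    aliases = aliases or {}
    counts = Counter()
    for titles in snapshots.values():
        # Normalise alternate titles, and use a set so that a title is
        # counted at most once per snapshot.
        counts.update({aliases.get(title, title) for title in titles})
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


# Toy data for illustration only.
toy_snapshots = {
    2010: ["Seven Samurai", "Avatar"],
    2011: ["Shichinin no samurai", "Avatar"],
    2012: ["Seven Samurai", "Looper"],
}
toy_aliases = {"Shichinin no samurai": "Seven Samurai"}

ranked = appearance_counts(toy_snapshots, toy_aliases)
# The intersection is every title that appears in all snapshots.
always_present = [title for title, n in ranked if n == len(toy_snapshots)]
print(ranked, always_present)
```

The alias table has to be built by hand, since the naming convention changed at some point and the same film can show up under two titles.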

And then we could probably create an index of ranks, with a higher weight on movies that appeared in the early ratings. This could neutralize the newness factor a bit.

To me, the “median year” is a hollow statistic: every year newer movies come out, and some are so good that they make it onto the list (through hype or sheer quality), but there are only two ways to add a new “old movie” to the list: either a dusty old film is discovered (very rare), or it is hyped to new glory thanks to a new release or a link or remake from a more recent movie.

Either way, it’s not a measure of quality or of the “internet’s taste”; it’s simply a crowd’s response to hype.

Plus, and I suppose this was debated before, IMDB has a very narrow demographic: American-based internet users with time to spend discussing their passion. Discussion always requires newer topics, so more recent or controversial movies are overhyped at release and then quickly left behind.

Just compare “The Avengers” and “The Dark Knight Rises” (both 2012 superhero entries): the first is simply a good, funny movie; the second is way worse but generated more hype thanks to all its controversies (“gritty realism,” “end of a trilogy,” “weird speech,” “clumsy Occupy Wall Street”).

IMHO, next year “Rises” will be in a much lower position while “The Avengers” will be in a higher place, simply because it has become the new “Star Wars”: the geeky masterpiece against which every new superhero/fantasy/sci-fi movie will be compared.

Seeing these has got me thinking: what would a graph look like if you counted movies not by their release date, but by the year the movie is set in? The only trend I can predict is a spike in the early 1940s. I’m not exactly a skilled data gatherer/graph maker, but I think I’m going to give this a shot.

Fascinating idea, but it’ll be a bit complicated to execute.

Some movies can’t be pinned down to a year. E.g., Lord of the Rings, Star Wars.

Some movies have more than one year for their setting. E.g., Godfather II.

Some movies take place over a very long period of time. E.g., Forrest Gump.

Some movies are just set in a generic present. E.g., The Hangover.

I suppose you could toss out ambiguous cases and have a movie like Godfather II take up two rows in the dataset.

Yeah, I’m working on gathering the data now. I think I’m going to generalize it to decades and see what that looks like. I’ve already thrown out Star Wars/Lord of the Rings, which, if anything, could only be relegated to “way before anything else on the list”; not sure which way I’ll go with films that span decades yet. I could count them twice, split them up into fractions, or just use only the start or end dates.

There’s still a lot of guesswork involved. If no time frame is stated and there’s no strong evidence to the contrary, I’m assuming the year of release.

If you had a ludicrous amount of time to spend on it, you could divide movies with definite years up to those years based on running time spent in each year. You could divide movies with indefinite years up to those years they could take place in, spreading it out evenly over the possible range. For example, Schindler’s List might contribute 0.2 to each of 1939 and 1945, 0.11 to each of the five years in between, and 0.05 to 1998.

…so yeah, grouping by decade is probably a good idea.

On a more practical note, separate bins for “indefinite extreme past,” “indefinite extreme future,” and “unknown or outside of time” would probably be quite manageable.
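The fractional approach and the decade grouping combine naturally. Here is a rough sketch in Python, using the illustrative Schindler’s List weights from the comment above; `decade_totals` and the input layout (title mapped to a year-to-weight dict) are assumptions of mine, and the weights are guesses rather than measured running times.

```python
from collections import defaultdict


def decade_totals(setting_weights):
    """Sum each film's per-year weights into decades, one unit per film."""
    totals = defaultdict(float)
    for weights in setting_weights.values():
        share = sum(weights.values())
        for year, weight in weights.items():
            decade = (year // 10) * 10
            # Dividing by the film's total keeps every film worth exactly 1.
            totals[decade] += weight / share
    return dict(totals)


# Illustrative weights from the comment above, not measured values.
schindler = {1939: 0.2, 1945: 0.2, 1998: 0.05}
schindler.update({year: 0.11 for year in range(1940, 1945)})

totals = decade_totals({"Schindler's List": schindler})
print(totals)
```

Films with no usable setting year would still need the separate “indefinite” bins; they could be handled by keying those bins with labels instead of years before calling a function like this.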

I’d be curious to see how these movies rank based on the age of the voters. I get the feeling few people over the age of 40 are ever voting on IMDB. I am 25 and I have plenty of peers who won’t even watch a black & white film…which might explain why Ed Wood is ranked so low despite being one of the best Tim Burton films.

Meh, I think I said on the other posts about this: The idea of quantifying something as subjective as “good” is kind of… ridiculous? I get a lot of flak from my fellow political “scientists” for this discomfort with quantitative analysis, but really, just look at the comments above and disagreements over TDKR and people mentioning stuff that should/shouldn’t be on the list. So questioning the “accuracy” of the list is silly and will lead to lots of agreeing to disagree, at best. Operationalization is in itself a subjective process. Quibbling about numbers and statistical significance distracts from deeper questions.

I’m more interested in looking for particular themes in films that dominate the list, or if directors or writers frequently make it, or films starring specific people. For example, two films by Hayao Miyazaki were just added. Whereas, as Clayshuldt noted, Ed Wood is no longer on the list, but The Nightmare Before Christmas just got bumped up. Or where are all the documentaries? And there are two films featuring Tom Hardy on there now. I’m more interested in figuring out what causes moves like those, rather than what’s on the list per se. And those are qualitative questions, not quantitative. And I’m sure they relate to some of the things already discussed in the comments, plus lots of other things.

For instance, overall trends in the kinds of movies people most want to see at a given time will most definitely influence the results. And definitely the demographic characteristics of who is voting.